At every hackathon, we see people working around the clock to develop ideas that are new in every respect.

Hackmotion, an app developed during the hackathon conducted by WACHacks in association with HackerEarth, is a striking example of this.

Read on to know more about this app.

What is Hackmotion?

Hackmotion is an app that aims to help users deal with stress. It lets users have a friendly conversation with their phone, and it records their emotions and conversations in a journal format.

The app identifies the emotions that students commonly experience. It is designed to improve their social well-being by helping them express themselves through journaling.

Technologies/platforms/languages

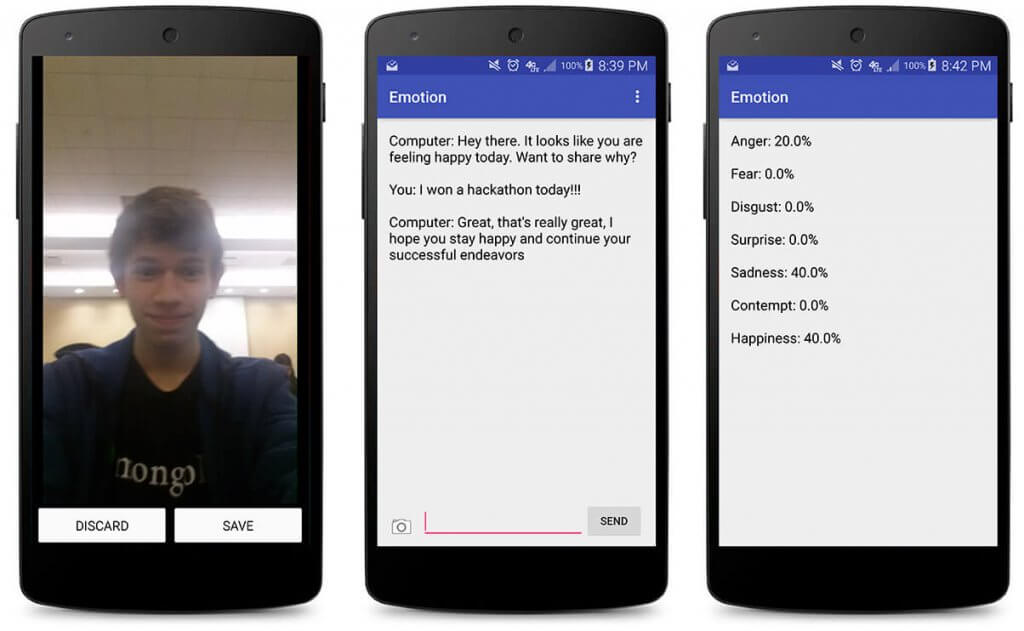

- Android Studio: To develop and test the app.

- The Microsoft Face API: To detect and analyze faces in the picture taken

- Microsoft’s Emotion API: To determine what emotion the person in the picture is feeling

- Clarifai API: To process the picture if it contains no face

- Java: For internal logic

- XML: For layouts

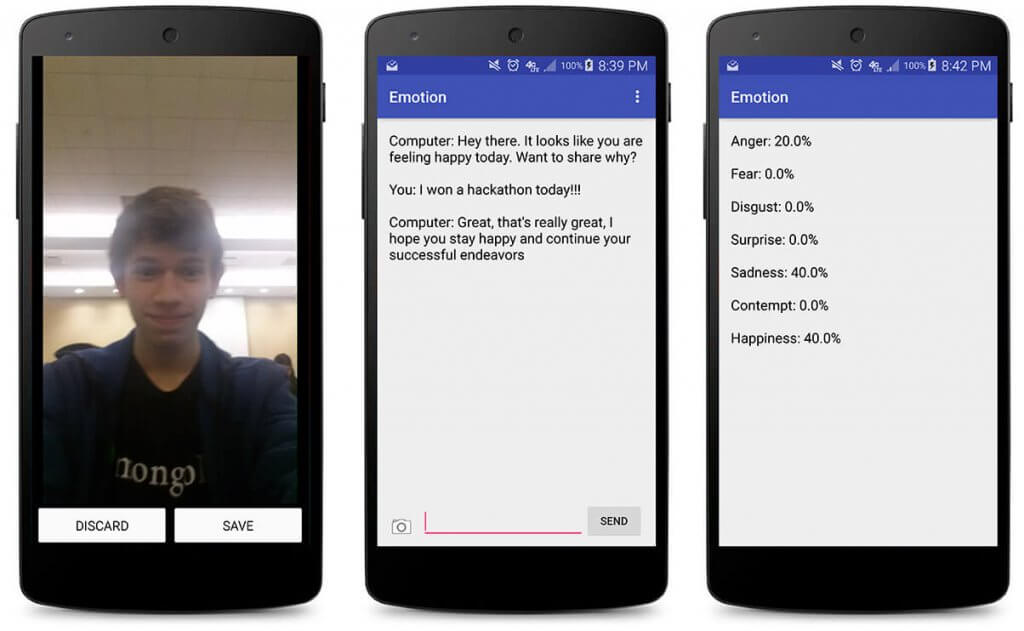

When the app is opened, users see a friendly UI with an option to take a picture by tapping the camera icon. The phone processes this image using the Microsoft Face API. Depending on whether the image contains a face, one of two APIs is then called:

- If there is a face in the image, Microsoft’s Emotion API is called. This API uses an algorithm that analyzes the face and determines what emotion the person is feeling. Once the emotion is recognised, the phone starts talking to the user according to their mood.

- If there isn’t a face in the image, the Clarifai API is called. This API determines the most significant object in the picture. For example, if the user takes a picture of leftover food, Clarifai first recognises the food as an object and then determines its type. For accuracy, the user is asked questions related to the picture. The conversation starts once the correct emotion is identified.
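The two branches above can be sketched in Java as a small routing class. This is only an illustration under stated assumptions, not the team's code: `FaceDetector`, `EmotionAnalyzer`, and `ObjectRecognizer` are hypothetical interfaces standing in for wrappers around the Face, Emotion, and Clarifai services, and the conversation prompts are placeholders.

```java
/**
 * Sketch of the routing step: if a face is found, analyze the emotion;
 * otherwise fall back to object recognition.
 * All interfaces here are hypothetical stand-ins, not real SDK types.
 */
final class ImageRouter {

    /** Hypothetical wrapper around a face detection service. */
    interface FaceDetector {
        int countFaces(byte[] image);
    }

    /** Hypothetical wrapper around an emotion recognition service. */
    interface EmotionAnalyzer {
        String dominantEmotion(byte[] image);
    }

    /** Hypothetical wrapper around an object recognition service. */
    interface ObjectRecognizer {
        String mainObject(byte[] image);
    }

    private final FaceDetector faces;
    private final EmotionAnalyzer emotions;
    private final ObjectRecognizer objects;

    ImageRouter(FaceDetector faces, EmotionAnalyzer emotions, ObjectRecognizer objects) {
        this.faces = faces;
        this.emotions = emotions;
        this.objects = objects;
    }

    /** Returns a placeholder opening line for the conversation about this picture. */
    String openConversation(byte[] image) {
        if (faces.countFaces(image) > 0) {
            // Face present: start the conversation from the detected emotion.
            String emotion = emotions.dominantEmotion(image);
            return "You seem " + emotion + ". Want to talk about it?";
        }
        // No face: identify the main object and ask the user about it.
        String object = objects.mainObject(image);
        return "I see " + object + ". How does it make you feel?";
    }

    public static void main(String[] args) {
        byte[] picture = new byte[0]; // dummy image data for the demo

        // Lambdas act as fake services so the sketch runs without network calls.
        ImageRouter withFace = new ImageRouter(img -> 1, img -> "happy", img -> "unused");
        ImageRouter withoutFace = new ImageRouter(img -> 0, img -> "unused", img -> "food");

        System.out.println(withFace.openConversation(picture));
        System.out.println(withoutFace.openConversation(picture));
    }
}
```

Keeping the services behind interfaces means the fallback to object recognition can be checked with fake implementations, without calling any remote API.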

Challenges

Here are some of the challenges that the team faced while building this application:

- Making the Microsoft and Face APIs work in harmony

- Creating the algorithm that analyzes the face

- Making sure that Clarifai API is called when no face is detected

Some of the future plans of the project creators, Brian Cherin and Kaushik Prakash, are:

- The efficiency and accuracy of the app will be improved.

- The frequency of each emotion will be displayed as a bar or line graph.

- The conversational flow of the chat/journal portion will be improved by displaying the specific time and any notes that the user has.
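As a rough illustration of the planned emotion-frequency graph, the counting step could look like the Java sketch below. It assumes the journal can be read as a plain list of emotion labels. The class name `EmotionFrequency`, its methods, and the sample labels are hypothetical, and the text bars only stand in for a real chart.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Sketch: count how often each emotion label appears in a journal. */
final class EmotionFrequency {

    /** Returns a map of emotion label to number of occurrences, sorted by label. */
    static Map<String, Integer> count(List<String> emotions) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String emotion : emotions) {
            // Start at 1 for a new label, otherwise add 1 to the existing count.
            counts.merge(emotion, 1, Integer::sum);
        }
        return counts;
    }

    /** Builds a text bar of n '#' characters as a stand-in for a chart bar. */
    static String bar(int n) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < n; i++) {
            sb.append('#');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Made-up sample data for the demo.
        List<String> journal = Arrays.asList("happy", "sad", "happy", "angry", "happy");
        for (Map.Entry<String, Integer> entry : count(journal).entrySet()) {
            System.out.println(entry.getKey() + " " + bar(entry.getValue()));
        }
    }
}
```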